Discover the high-level benefits of SAP Fiori with our in-depth guide. Explore its design principles, the SAP Fiori Apps Library, and how it integrates with existing systems to enhance business performance and user experience.

User experience has a direct effect on how efficiently business processes run. SAP Fiori addresses this with a modern, role-based approach to user interfaces within SAP systems. This blog post gives a strategic, high-level overview of SAP Fiori: its core functionality, its benefits, and its integration options. Understanding SAP Fiori at this level helps organizations see how it changes user interaction and supports productivity.

At its core, SAP Fiori is SAP’s design system for the user experience of its applications. It combines modern design principles with SAP’s backend systems to offer a streamlined, intuitive interface, and it aims for a consistent, responsive experience across desktop, tablet, and mobile devices.

Simplicity is central to SAP Fiori: the goal is to make interactions with complex SAP systems easier. One way it does this is role-based access, which ensures that users see only the information and functions relevant to their roles. This tailored approach improves efficiency and reduces the learning curve associated with traditional SAP interfaces.

Exploring the SAP Fiori Apps Library: A Treasure Trove of Functionality

The SAP Fiori Apps Library (officially the SAP Fiori apps reference library) is a central part of the SAP Fiori ecosystem, offering a repository of pre-built applications designed to meet diverse business needs. The library groups applications into three main types: transactional apps, analytical apps, and fact sheets.

Transactional apps facilitate daily business tasks such as processing orders or managing inventory. They streamline these processes by offering a simplified and user-friendly interface. Analytical apps, on the other hand, provide insights and reports based on real-time data, enabling informed decision-making. Fact sheets offer detailed information on business objects, such as customers or products, giving users a comprehensive view of critical data.

By leveraging the SAP Fiori Apps Library, organizations can quickly identify and deploy applications that align with their business requirements. This library not only accelerates implementation but also ensures that users have access to the tools they need to perform their roles effectively.

Navigating the SAP Fiori Library: An Essential Guide

The SAP Fiori Library, accessible via the SAP Fiori Launchpad, serves as the gateway to exploring and managing SAP Fiori applications. This library provides a user-friendly interface that allows users to search for, explore, and launch various applications based on their roles and needs.

Users can browse the SAP Fiori Library by category or use the search function to find specific apps. Each application is accompanied by detailed information, including its purpose, functionality, and prerequisites. Administrators can use this information to plan application deployment and configuration.

Effective navigation of the SAP Fiori Library is crucial for maximizing the benefits of SAP Fiori. By familiarizing themselves with the library’s features and functionalities, users and administrators can ensure a seamless experience and optimize their use of SAP Fiori applications.

The Role-Based Approach: Tailoring SAP Fiori to User Needs

A defining feature of SAP Fiori is its role-based approach, which tailors the user experience to specific roles within an organization. This approach ensures that users see only the information and functionalities relevant to their responsibilities, reducing complexity and improving efficiency.

For instance, a finance manager might have access to applications related to financial reporting and budget management, while a sales representative would see applications focused on sales orders and customer interactions. This targeted presentation of information helps users focus on their tasks without being overwhelmed by irrelevant data or features.
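The finance manager and sales representative example can be sketched in a few lines of Python. This is only an illustration of the idea, not how SAP Fiori implements role assignment: the role keys, the app names, and the `visible_apps` helper are all hypothetical and chosen to mirror the paragraph above.

```python
# Hypothetical role-to-app assignments; role keys and app names are
# illustrative only and do not correspond to real SAP roles or apps.
ROLE_APPS: dict[str, set[str]] = {
    "finance_manager": {"Financial Reporting", "Budget Management"},
    "sales_representative": {"Sales Orders", "Customer Interactions"},
}


def visible_apps(user_roles: list[str]) -> list[str]:
    """Return the sorted union of apps assigned to the given roles."""
    apps: set[str] = set()
    for role in user_roles:
        # Unknown roles contribute no apps instead of raising an error.
        apps |= ROLE_APPS.get(role, set())
    return sorted(apps)


print(visible_apps(["finance_manager"]))
# prints ['Budget Management', 'Financial Reporting']
```

A user holding both roles would simply see the union of both sets, which is the point of the role-based approach: what appears on screen follows from the roles assigned, not from the full list of available apps.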

By adopting a role-based approach, SAP Fiori enhances productivity and streamlines workflows. Users can complete tasks more efficiently, and organizations can benefit from a more organized and user-centric interface.

SAP Fiori Login: Accessing Your Applications Securely

Accessing SAP Fiori applications involves a straightforward login process designed to ensure secure and personalized access. The SAP Fiori login page is the entry point to the SAP Fiori Launchpad, where users can access their assigned applications and services.

Users enter their credentials, typically a username and password, to log in. Depending on the organization’s security setup, single sign-on (SSO) may replace the manual login, and two-factor authentication (2FA) may be required as an additional step. Once logged in, users are directed to the SAP Fiori Launchpad, where they can access their role-specific applications and begin their tasks.
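As a rough sketch of how an organization’s security settings shape the login steps, the Python snippet below models the decision. It is a simplified, hypothetical model: `LoginPolicy`, `required_steps`, and the step names are assumptions made for illustration, and real SAP landscapes usually delegate these checks to an identity provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginPolicy:
    """Hypothetical security settings for an organization."""
    sso_enabled: bool = False
    require_second_factor: bool = False


def required_steps(policy: LoginPolicy, has_sso_session: bool) -> list[str]:
    """List the steps a user goes through before reaching the launchpad."""
    if policy.sso_enabled and has_sso_session:
        # An existing SSO session replaces the manual username/password entry.
        steps = ["sso_session"]
    else:
        steps = ["username_password"]
    if policy.require_second_factor:
        steps.append("second_factor")
    steps.append("open_launchpad")
    return steps


print(required_steps(LoginPolicy(require_second_factor=True), has_sso_session=False))
# prints ['username_password', 'second_factor', 'open_launchpad']
```

Keeping the policy separate from the flow reflects the paragraph above: the login steps a user sees depend on the organization’s settings rather than on the application itself.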

The SAP Fiori login process is designed to provide both security and convenience, ensuring that users can easily access their applications while maintaining the integrity of the system.

Design Principles of SAP Fiori: Enhancing User Experience

SAP Fiori’s design principles are foundational to its success in transforming user experiences. These principles include simplicity, coherence, and responsiveness, each contributing to a more effective and engaging interface.

Simplicity is about reducing complexity by presenting only the necessary information and actions. Coherence ensures a consistent look and feel across all applications, making it easier for users to navigate and use different apps. Responsiveness guarantees that applications work seamlessly across various devices and screen sizes.

By adhering to these design principles, SAP Fiori delivers a user experience that is both visually appealing and highly functional. This focus on user-centric design helps organizations achieve higher levels of user satisfaction and operational efficiency.

Integrating SAP Fiori with Existing Systems: Best Practices

Integrating SAP Fiori with existing SAP systems is a critical step in realizing its full potential. Effective integration ensures that SAP Fiori applications can interact seamlessly with other SAP modules and data sources, providing a unified user experience.

Organizations should work closely with their IT teams or SAP consultants to facilitate integration. This process may involve setting up interfaces, configuring data exchanges, and testing application performance. Successful integration allows organizations to leverage SAP Fiori’s capabilities fully, enhancing their overall SAP ecosystem.

Best practices for integration include thorough planning, comprehensive testing, and ongoing monitoring. By following these practices, organizations can ensure that their SAP Fiori implementation is smooth and effective.

Future Trends in SAP Fiori: What to Expect

As technology continues to evolve, so too does SAP Fiori. Future trends in SAP Fiori may include advancements in artificial intelligence (AI), machine learning, and advanced analytics. These innovations aim to further enhance user experience by providing intelligent recommendations, predictive insights, and automated workflows.

Additionally, SAP Fiori is likely to continue its focus on mobile and cloud-based solutions, enabling users to access applications and data from anywhere and on any device. Staying informed about these trends will help organizations remain competitive and fully leverage the capabilities of SAP Fiori.

Conclusion

SAP Fiori represents a significant leap forward in user experience design for SAP applications. By providing a modern, role-based, and responsive interface, SAP Fiori enhances productivity and user satisfaction. Understanding SAP Fiori from a high-level perspective helps organizations appreciate its transformative impact on business operations.

From exploring the SAP Fiori Apps Library to integrating with existing systems, SAP Fiori offers a range of features and benefits designed to optimize user interactions and streamline processes.

The post “A Strategic View of SAP Fiori: Insights from a Space Level Perspective” appeared first on TECHNICAL GYAN GURU.

I recently had the chance to work on an Ariba-to-SAP integration that uses SAP PI. Ariba can also be integrated with SAP without SAP PI, but today we’ll look at how PI works as the middleware in between.

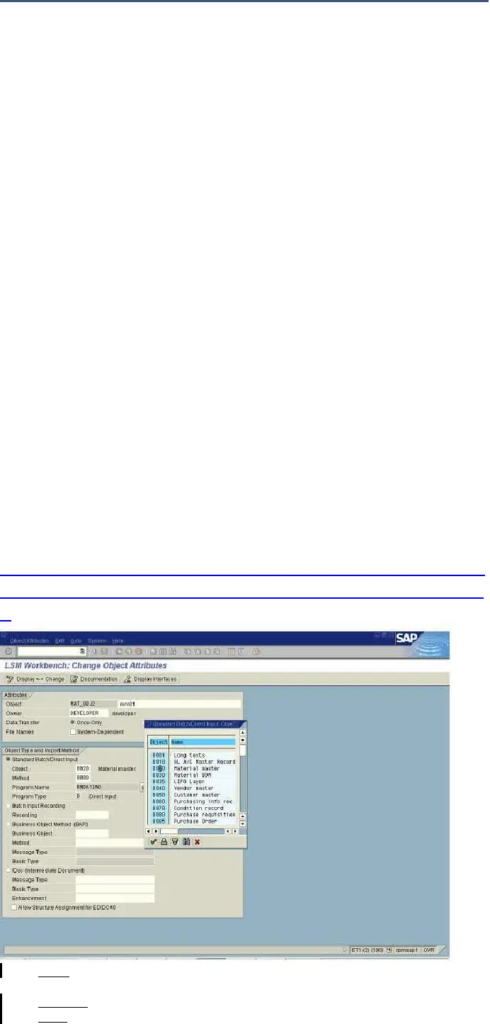

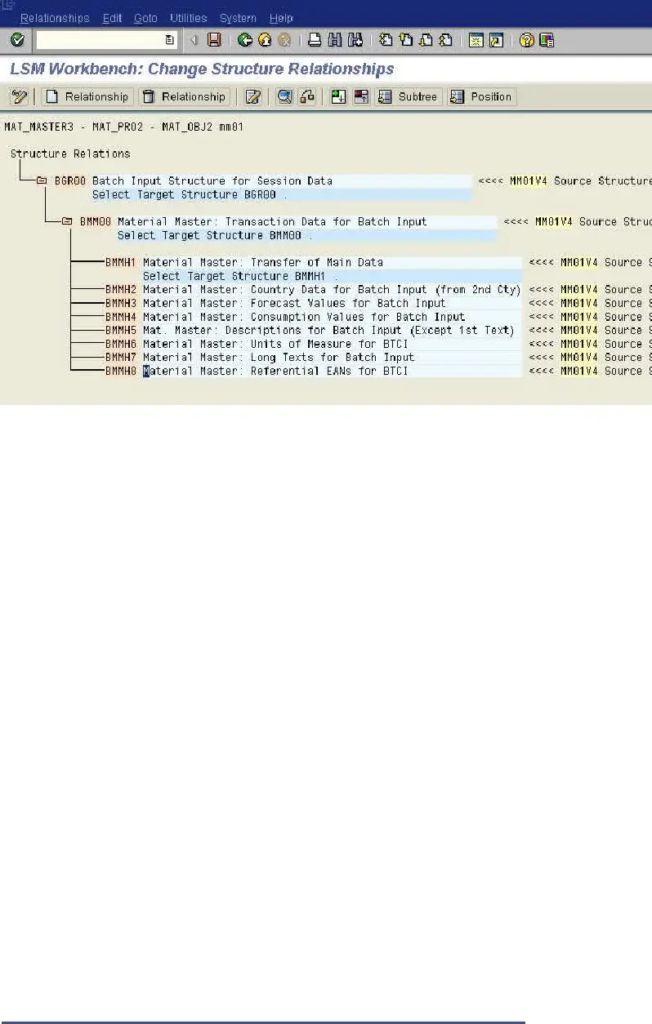

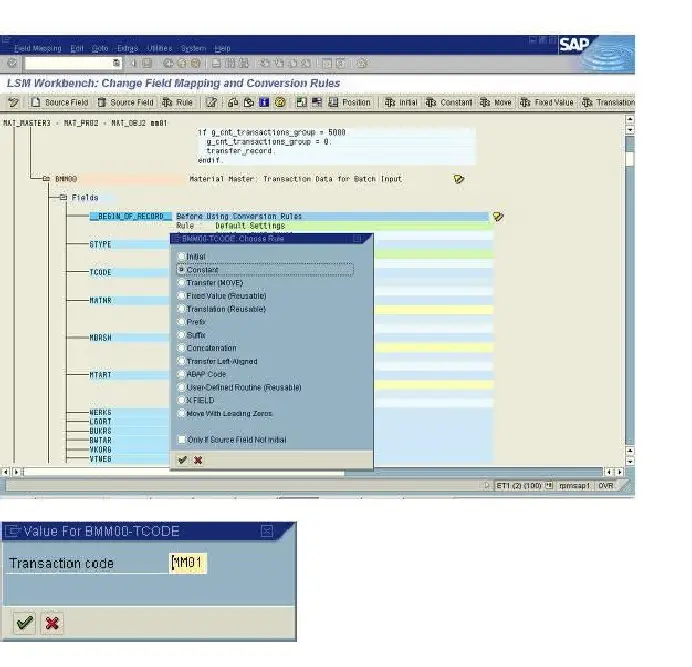

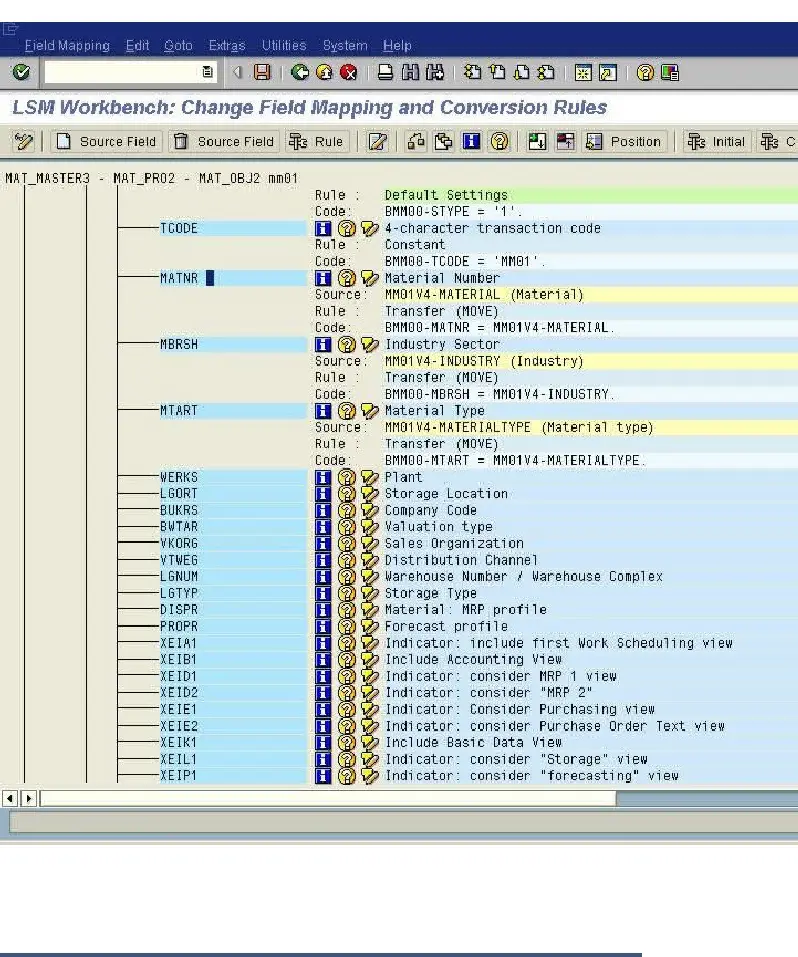

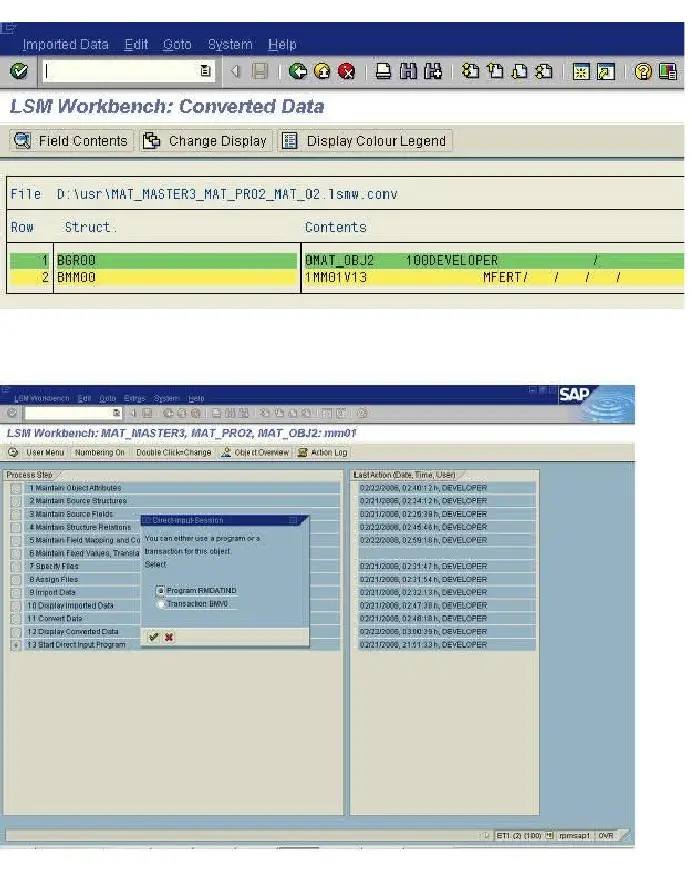

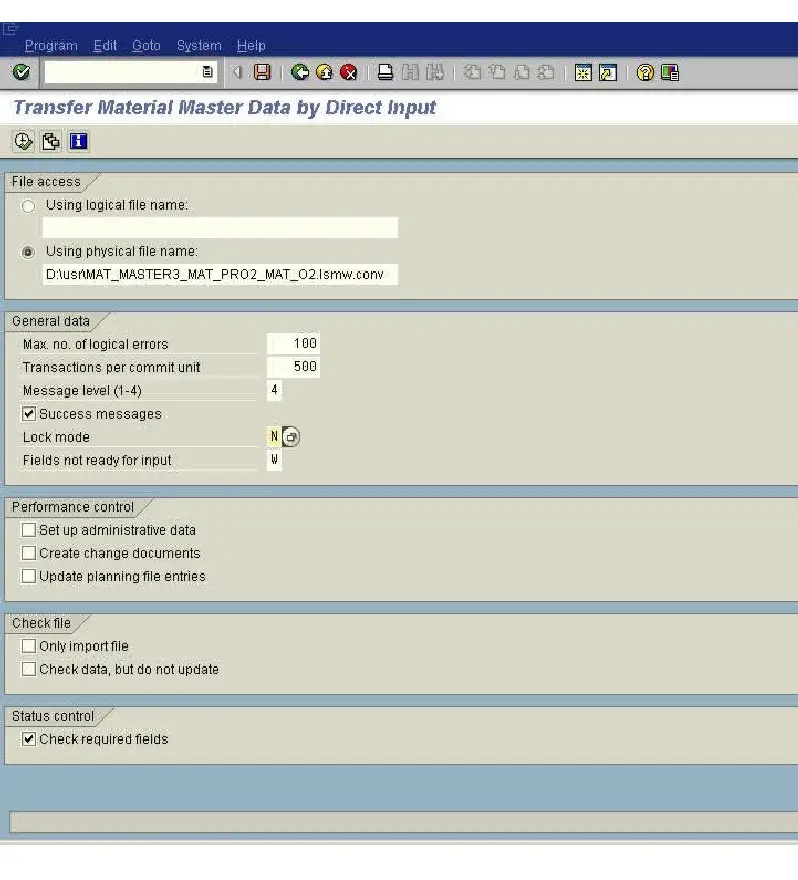

The simple answer is that not all people work on S/4HANA projects.

Furthermore, LSMW remains active and vibrant.
